Oleksandr Tomchuk, CEO of Triplegangers, found his company’s website paralyzed over the weekend by what looked like a distributed denial-of-service (DDoS) attack. The surprising culprit was a bot from OpenAI.

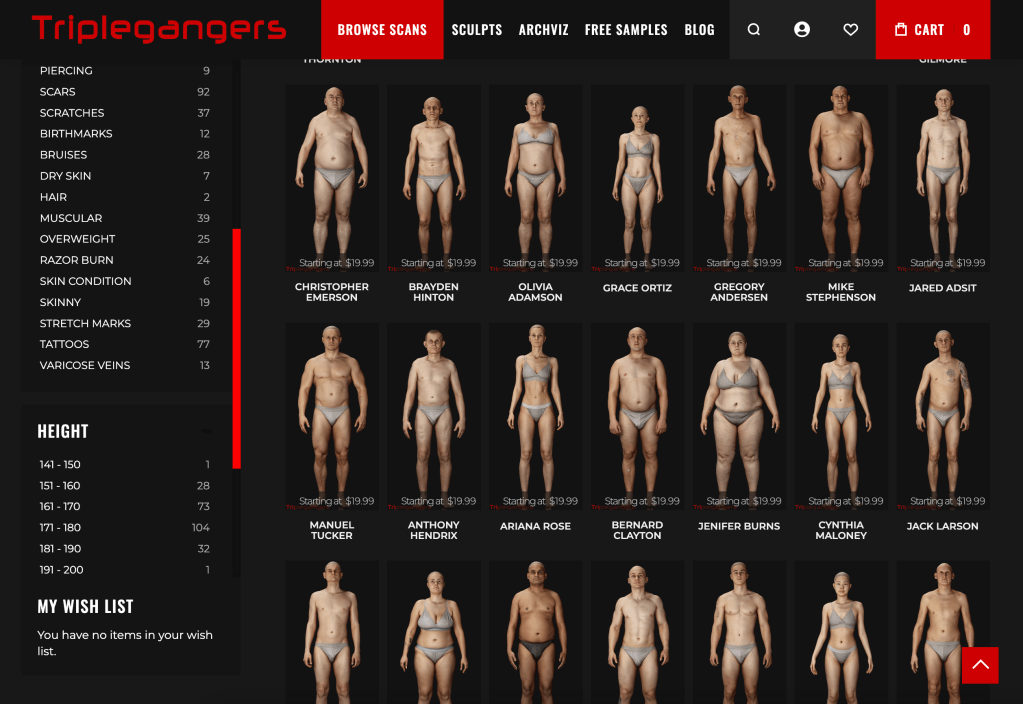

The site lists more than 65,000 products, each with at least three photos. OpenAI’s bot hammered its servers with “tens of thousands” of requests as it tried to download all of that content.

The requests came from over 600 OpenAI IP addresses, a figure that could grow as the company finishes analyzing its logs. “OpenAI’s crawlers were smothering our site, it felt like a DDoS assault,” said Tomchuk.

Triplegangers’ website is its business. The company sells 3D image files of “human digital doubles” to 3D artists, video game developers, and others who need authentic human features. Its terms of service prohibit unauthorized data scraping, but that did not protect the site: its robots.txt file was misconfigured and did nothing to keep out OpenAI’s crawler, GPTBot.

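The robots.txt file, the core of the Robots Exclusion Protocol, tells crawlers which parts of a site they should not crawl. It is a request rather than an enforcement mechanism: well-behaved crawlers honor it, but only for the rules the site actually writes. A site whose robots.txt does not disallow a crawler, either by name or through a wildcard rule, leaves its content open to that crawler.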
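To illustrate how the protocol works from a compliant crawler’s side, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt contents and the URL are hypothetical, not Triplegangers’ actual file; `GPTBot` is the user-agent token OpenAI documents for its crawler. A well-behaved crawler runs a check like this before each fetch, while a non-compliant one simply ignores the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: bar OpenAI's GPTBot from the whole site,
# while leaving every other crawler unrestricted.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the robots.txt above permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/products/123"  # placeholder URL
    for agent in ("GPTBot", "SomeOtherBot"):
        print(agent, is_allowed(agent, url))
    # Prints:
    # GPTBot False
    # SomeOtherBot True
```

Note that the parser matches on the short product token (`GPTBot`), which is how robots.txt rules name crawlers.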

To make matters worse, the site went down during U.S. business hours, and the bot’s heavy CPU usage and download activity drove up the company’s AWS bill.

## Regaining Control: A Challenging Task

By Wednesday, with a correctly configured robots.txt file and a Cloudflare account in place, Tomchuk had managed to block GPTBot, among other crawlers. The site was back online, but the ordeal raised troubling questions.
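Unlike robots.txt, a service such as Cloudflare can refuse unwanted requests outright. The sketch below shows that idea at the application level, assuming a Python WSGI app: a hypothetical middleware, `block_ai_crawlers`, with a hypothetical token list, that answers 403 Forbidden to any request whose User-Agent header contains a blocked token. This is not how Cloudflare works internally, and user-agent strings can be spoofed, so real deployments typically pair this kind of rule with IP- or behavior-based filtering.

```python
# Hypothetical list of lowercase user-agent tokens to refuse.
BLOCKED_TOKENS = ("gptbot",)


def block_ai_crawlers(app):
    """Wrap a WSGI app so requests from blocked crawlers get 403 Forbidden."""
    def middleware(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in user_agent for token in BLOCKED_TOKENS):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden\n"]
        # Everyone else reaches the real application unchanged.
        return app(environ, start_response)
    return middleware


def site_app(environ, start_response):
    """Stand-in for the actual website."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok\n"]


app = block_ai_crawlers(site_app)
```

Because the check runs before the application, a blocked crawler never triggers the page rendering or image downloads that drive up server costs.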

First, it was unclear what the OpenAI bot had taken. Second, there was no obvious way to contact OpenAI to resolve the issue. Finally, Triplegangers’ products are built from images of real people, which makes the matter especially sensitive under the GDPR.

The incident also exposed a key gap: the burden of blocking such scraping falls on site owners, who must know how to configure robots.txt for every crawler they want to keep out.

## Lessons Learned

Tomchuk urges other small businesses to watch for AI bots that might exploit their copyrighted assets. According to ad-tech company DoubleVerify, AI crawlers and scrapers drove an 86% increase in “general invalid traffic” in 2024.

The incident was a wake-up call, highlighting the need to monitor server logs regularly to catch unwanted bots at work. Tomchuk argues that AI bots should follow a permission-first approach, asking before they crawl instead of scraping indiscriminately.
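As a starting point for that kind of monitoring, here is a minimal sketch that scans web server access logs in the common Apache/Nginx “combined” format and reports, for each watched crawler, how many requests it made and from how many distinct IP addresses. The watch list, the function name `summarize`, and the sample log lines (including their documentation-range IPs and abbreviated user-agent strings) are all hypothetical.

```python
import re
from collections import Counter, defaultdict

# "Combined" log format: client IP first, quoted user agent last.
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# Hypothetical watch list of crawler user-agent tokens.
WATCHED_BOTS = ("GPTBot",)


def summarize(lines):
    """Return {bot: (request_count, distinct_ip_count)} for watched bots."""
    requests = Counter()
    ips = defaultdict(set)
    for line in lines:
        match = LOG_RE.match(line)
        if not match:
            continue  # skip lines not in combined format
        user_agent = match.group("ua").lower()
        for bot in WATCHED_BOTS:
            if bot.lower() in user_agent:
                requests[bot] += 1
                ips[bot].add(match.group("ip"))
    return {bot: (requests[bot], len(ips[bot])) for bot in requests}


# Hypothetical sample log lines.
SAMPLE_LOG = [
    '203.0.113.5 - - [04/Jan/2025:10:00:00 +0000] "GET /products/1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '203.0.113.9 - - [04/Jan/2025:10:00:01 +0000] "GET /products/2 HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '198.51.100.7 - - [04/Jan/2025:10:00:02 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

if __name__ == "__main__":
    print(summarize(SAMPLE_LOG))  # prints {'GPTBot': (2, 2)}
```

Counting distinct IPs alongside request volume matters because, as in this incident, a single crawler can spread its traffic across hundreds of addresses.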

Original source: Read the full article on TechCrunch