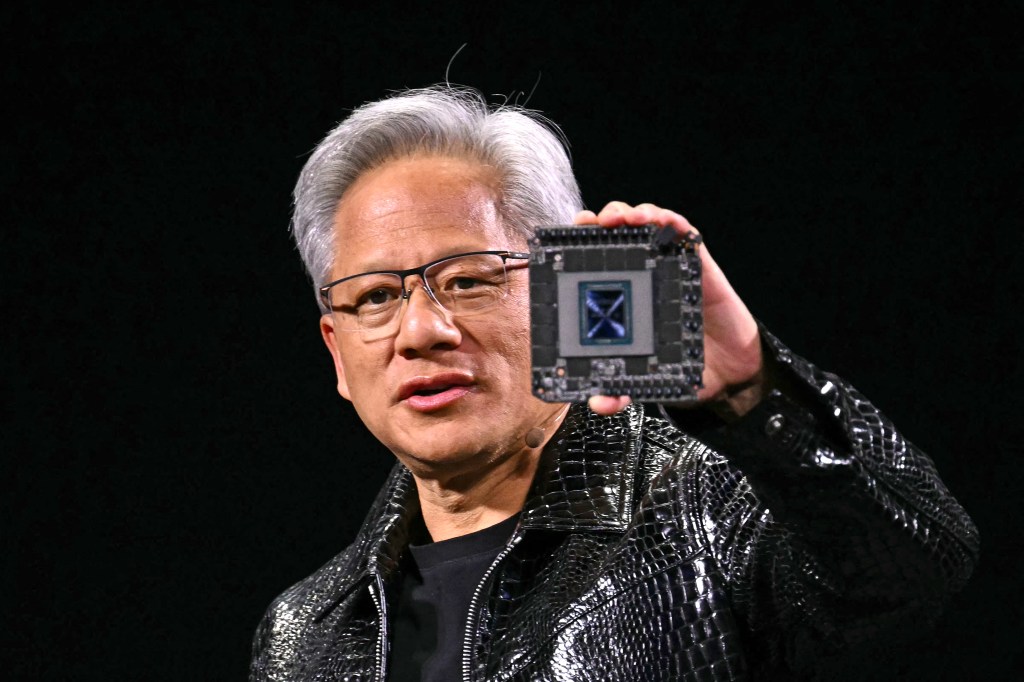

Nvidia CEO Jensen Huang says the performance of the company's AI chips is improving faster than Moore's Law, the principle that has driven progress in computing for decades.

In a recent interview with TechCrunch, Huang stated, “Our systems are advancing much faster than Moore’s Law.” Moore's Law predicts that the number of transistors on computer chips will double roughly every two years, approximately doubling their performance. The prediction held for decades, driving rapid technological progress and falling costs.

Although Moore's Law has slowed in recent years, Nvidia says its AI chips are advancing faster still: its latest data center superchip is more than 30x faster at running AI inference workloads than the previous generation.
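To put the 30x figure in perspective, here is a minimal sketch that models Moore's Law as a clean doubling every two years (a simplifying assumption; the real cadence has varied) and asks how long that curve alone would take to reach a 30x gain. The helper names `moore_factor` and `years_to_reach` are hypothetical, and the comparison is illustrative only, since Nvidia's 30x figure measures inference speed rather than transistor count.

```python
import math

# Assumption: Moore's Law idealized as an exact doubling every 2 years.
DOUBLING_PERIOD_YEARS = 2.0


def moore_factor(years: float) -> float:
    """Growth factor an idealized Moore's Law curve predicts after `years`."""
    return 2 ** (years / DOUBLING_PERIOD_YEARS)


def years_to_reach(factor: float) -> float:
    """Years the idealized curve needs to reach a given growth factor."""
    return DOUBLING_PERIOD_YEARS * math.log2(factor)


if __name__ == "__main__":
    # One two-year period under the idealized curve yields a 2x gain.
    print(f"Gain after 2 years: {moore_factor(2):.1f}x")
    # A 30x gain would take about five doublings, i.e. roughly a decade.
    print(f"Years to reach 30x: {years_to_reach(30):.1f}")
```

Under this simplified model, a 30x jump corresponds to about five doublings, or close to ten years of Moore's Law progress, which is the gap Huang's claim rests on.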

Huang argues that Nvidia can outpace Moore's Law because it innovates across the entire stack at once: architecture, chip, system, libraries, and algorithms. At a time when many question whether AI progress is stalling, his claim is an optimistic signal. Because many leading AI labs train and run their models on Nvidia chips, improvements in those chips could translate into further gains in AI capabilities.

Huang rejects the idea that AI progress has stalled, arguing that three AI scaling laws are currently in play: pre-training, post-training, and test-time compute. He expects inference to follow a cost curve similar to the one Moore's Law produced for computing, with performance rising as costs fall.

However, as tech companies increasingly focus on inference, some question whether Nvidia's expensive chips will keep their dominance. Huang's answer is Nvidia's latest data center superchip, the GB200 NVL72, which is considerably faster than its predecessor, the H100. He argues that such gains will make resource-intensive AI reasoning models more affordable over time.

Huang predicts that the cost of AI models will keep falling thanks to hardware advances like the ones Nvidia is making, and that faster chips will be a major driver of that decline. The company, he says, is committed to building higher-performing chips over the long term.

Original source: Read the full article on TechCrunch